Fast Facts

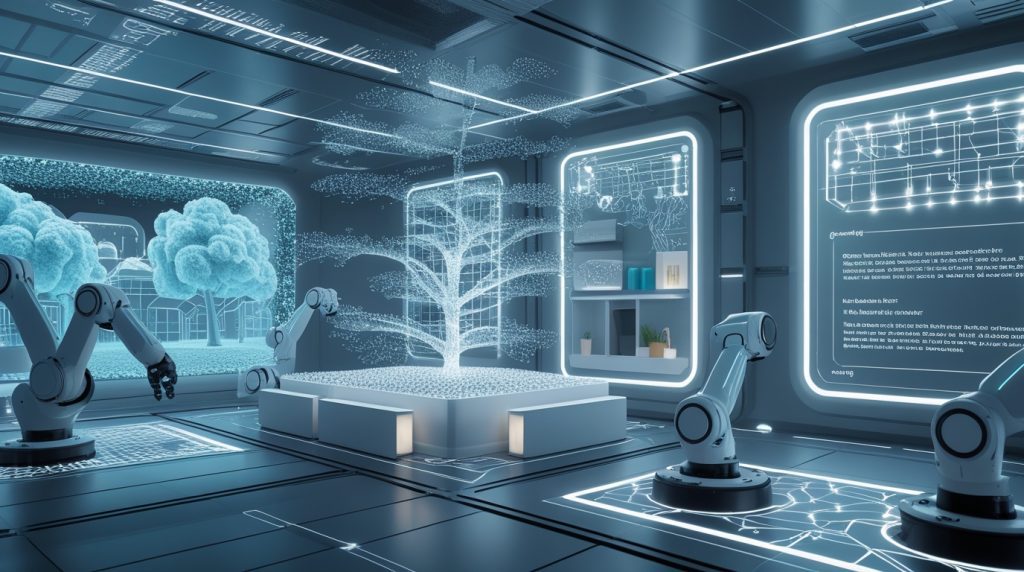

MIT CSAIL’s steerable scene generation creates diverse, physically accurate 3D environments to train robots more effectively, using diffusion models guided by Monte Carlo Tree Search. The system generates complex scenes with up to 34 objects after training on scenes averaging just 17 objects, accurately follows text prompts for specific scene arrangements (98% accuracy for pantry shelves), and creates training data that helps bridge the costly simulation-to-reality gap in industrial robotics.

The robotics data bottleneck

Imagine a warehouse robot consistently failing when encountering an unusually cluttered shelf—not because of hardware limitations, but because its training never included such scenarios. This sim-to-real gap represents one of the most persistent challenges in industrial robotics, where synthetic training environments fail to prepare robots for real-world complexity.

The fundamental issue is data quality, not just quantity. As Nicholas Pfaff, MIT PhD student and lead researcher on the project, explains: “A key insight from our findings is that it’s OK for the scenes we pre-trained on to not exactly resemble the scenes that we actually want. Using our steering methods, we can move beyond that broad distribution and sample from a ‘better’ one—generating the diverse, realistic, and task-aligned scenes that we actually want to train our robots in.”

This insight drives MIT Computer Science and Artificial Intelligence Laboratory’s (CSAIL) latest innovation—steerable scene generation—developed in collaboration with Toyota Research Institute and Amazon. This approach doesn’t just create more training environments; it creates better, more targeted ones that reflect real-world physical constraints and task requirements.

How steerable scene generation works: A technical breakdown

Why current robot training simulations fall short

Traditional robot training faces a difficult choice between costly real-world data collection and limited simulated environments. Collecting demonstrations on physical robots is time-consuming and not perfectly repeatable, while handcrafted digital environments rarely capture real-world physics. Existing procedural generation can produce numerous scenes quickly, but those scenes often lack physical plausibility and don’t represent environments robots would actually encounter.

The steering advantage: Guided environmental creation

Steerable scene generation introduces a fundamental shift: rather than generating random environments, it guides the creation process toward specific objectives. The system employs a diffusion model trained on over 44 million 3D rooms filled with models of everyday objects. Unlike image diffusion models that create 2D pictures, this system generates complete 3D environments by progressively refining random noise into coherent scenes through a process called “in-painting”.

The true innovation lies in how researchers “steer” this generation process using three complementary approaches:

- Monte Carlo Tree Search (MCTS): The system treats scene creation as a sequential decision-making process, exploring multiple potential arrangements before selecting the most advantageous path.

- Reinforcement learning post-training: After initial training, the model undergoes fine-tuning with task-specific rewards, learning through trial-and-error to produce scenes that score higher against objectives like maximizing clutter or ensuring physical stability.

- Conditional generation: Users can provide text prompts specifying exact requirements, such as “a kitchen with four apples and a bowl on the table”.
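
The search-based steering idea can be illustrated with a toy example. The sketch below is not the researchers' implementation: it assumes a hypothetical one-dimensional shelf with seven slots, a made-up rule that objects may not sit in adjacent slots, and a reward equal to the number of objects placed. Each tree node is a partial scene, and random completions stand in for the diffusion model's proposals.

```python
import math
import random

SLOTS = 7  # hypothetical shelf with seven placement slots


def legal_moves(scene):
    """Slots that are free and not adjacent to any occupied slot."""
    return [s for s in range(SLOTS) if all(abs(s - o) > 1 for o in scene)]


def rollout(scene, rng):
    """Complete a partial scene with random legal placements; reward = object count."""
    scene = list(scene)
    moves = legal_moves(scene)
    while moves:
        scene.append(rng.choice(moves))
        moves = legal_moves(scene)
    return len(scene)


class Node:
    def __init__(self, scene, parent=None):
        self.scene = scene      # tuple of occupied slots (a partial scene)
        self.parent = parent
        self.children = {}      # move -> Node
        self.visits = 0
        self.total = 0.0

    def untried(self):
        return [m for m in legal_moves(self.scene) if m not in self.children]

    def best_child(self, c=1.4):
        # UCB1: average reward plus an exploration bonus
        return max(
            self.children.values(),
            key=lambda n: n.total / n.visits
            + c * math.sqrt(math.log(self.visits) / n.visits),
        )


def mcts(iterations=500, seed=0):
    rng = random.Random(seed)
    root = Node(())
    for _ in range(iterations):
        node = root
        # Selection: descend while the node is fully expanded
        while not node.untried() and node.children:
            node = node.best_child()
        # Expansion: add one unexplored placement
        untried = node.untried()
        if untried:
            move = rng.choice(untried)
            child = Node(node.scene + (move,), parent=node)
            node.children[move] = child
            node = child
        # Simulation: random completion of the partial scene
        reward = rollout(node.scene, rng)
        # Backpropagation: update statistics up to the root
        while node is not None:
            node.visits += 1
            node.total += reward
            node = node.parent
    # Read out the most-visited path, then fill any remaining legal slots
    node = root
    while node.children:
        node = max(node.children.values(), key=lambda n: n.visits)
    scene = list(node.scene)
    moves = legal_moves(scene)
    while moves:
        scene.append(moves[0])
        moves = legal_moves(scene)
    return sorted(scene)


if __name__ == "__main__":
    print(mcts())
```

In this toy setting the search tends to favor placements that leave room for more objects, which loosely mirrors how search can push scenes beyond the object counts typical of the training data.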

Table: Steering Methods and Their Applications

| Steering Method | How It Works | Industrial Application |
|---|---|---|
| Monte Carlo Tree Search | Explores multiple scene arrangement possibilities before selecting optimal paths | Testing robot performance in edge cases and high-complexity scenarios |
| Reinforcement Learning | Fine-tunes models using reward functions for specific objectives | Generating task-specific environments like maximally-cluttered shelves |
| Conditional Generation | Follows precise text prompts to create specified scene arrangements | Creating standardized test environments across multiple facilities |
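
Reinforcement learning post-training depends on a scalar reward that scores each generated scene. As a minimal sketch, assuming scenes are represented as top-down discs `(x, y, radius)` and that the objective is "more objects, fewer interpenetrations", a reward could look like the following. The representation, the function names, and the `penalty` weight are all illustrative assumptions, not details from the research.

```python
import math
from itertools import combinations


def overlaps(a, b):
    """True if two discs (x, y, r) interpenetrate."""
    (ax, ay, ar), (bx, by, br) = a, b
    return math.hypot(ax - bx, ay - by) < ar + br


def clutter_reward(scene, penalty=2.0):
    """Reward object count, minus a penalty per overlapping pair (hypothetical weighting)."""
    n_overlaps = sum(overlaps(a, b) for a, b in combinations(scene, 2))
    return len(scene) - penalty * n_overlaps


if __name__ == "__main__":
    tidy = [(0, 0, 1), (5, 0, 1)]
    crowded = [(0, 0, 1), (1, 0, 1), (5, 0, 1)]
    print(clutter_reward(tidy), clutter_reward(crowded))
```

With a penalty larger than 1, adding an object that collides with another lowers the score, so a model fine-tuned against this reward is nudged toward scenes that are dense but still collision-free.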

Why this matters for industrial robotics

Solving the scalability problem in robot training

For industrial applications, the ability to generate targeted training scenarios at scale addresses several critical bottlenecks:

- Cost reduction: Training robots exclusively in real-world environments requires significant physical infrastructure and risks equipment damage during the learning process. MIT’s approach enables thousands of training iterations without hardware costs or downtime.

- Edge case preparation: Industrial environments inevitably present unusual situations—overflowing bins, irregular object arrangements, or high-priority orders that require non-standard workflows. Steerable scene generation can deliberately create these challenging scenarios to strengthen robot performance.

- Accelerated deployment: As Jeremy Binagia, an applied scientist at Amazon Robotics who wasn’t involved in the paper, notes: “Today, creating realistic scenes for simulation can be quite a challenging endeavor… Steerable scene generation offers a better approach: train a generative model on a large collection of pre-existing scenes and adapt it to specific downstream applications.”

From virtual training to physical performance

The ultimate measure of any simulation technology is how well skills transfer to real operations. The MIT team validated their approach by recording virtual robots performing tasks across generated environments: “carefully placing forks and knives into a cutlery holder, for instance, and rearranging bread onto plates in various 3D settings”. Each simulation appeared fluid and realistic, closely resembling the real-world situations that robots would encounter.

This translation from simulation to reliable real-world performance is the critical foundation for deploying flexible automation in dynamic industrial environments.

Practical applications across industrial sectors

Warehouse and logistics automation

In distribution centers, robots frequently encounter mixed SKUs, variable packaging, and constantly changing inventory layouts. Steerable scene generation can create countless shelf configurations and product arrangements, training robots to handle the full spectrum of picking and placing scenarios they’ll face in actual operations. The technology’s ability to follow precise prompts enables companies to generate environments matching specific facility layouts or seasonal inventory patterns.

Manufacturing and assembly lines

Modern manufacturing increasingly requires flexible automation that can adapt to product variations and mixed-model production. The system’s ability to generate “physically accurate, lifelike environments” makes it particularly valuable for training robots in component handling, kitting operations, and assembly tasks where precise manipulation matters. The guarantee that “a fork doesn’t pass through a bowl on a table,” which avoids the common 3D graphics glitch known as “clipping,” helps learned manipulation skills translate to physical systems.

Healthcare and specialized environments

Though less obvious, healthcare applications like hospital logistics and pharmaceutical handling benefit from this technology. Generating varied hospital room layouts or medication cabinet configurations helps train robots to navigate clinical environments without the disruption or risk of training in actual facilities.

Technical capabilities and performance metrics

The research team conducted rigorous testing to validate their system’s performance across multiple dimensions:

- Prompt adherence accuracy: The system correctly followed user prompts 98% of the time for pantry shelf generation and 86% for messy breakfast tables, at least a 10% improvement over comparable methods like MiDiffusion and DiffuScene.

- Complexity scaling: Using MCTS, the system doubled the object count in generated scenes, creating restaurant tables with as many as 34 items after training on scenes averaging only 17 objects.

- Physical feasibility: Through projection and simulation techniques, the system ensures generated scenes obey physical constraints, with objects placed in stable, non-penetrating arrangements.

Table: Performance Benchmarks Against Alternative Methods

| Metric | Steerable Scene Generation | Comparable Methods |
|---|---|---|
| Pantry Shelf Prompt Accuracy | 98% | <88% |
| Messy Breakfast Table Accuracy | 86% | <76% |
| Maximum Objects in Restaurant Scene | 34 items | Limited to training distribution averages |
| Physical Feasibility Enforcement | Guaranteed via projection and simulation | Often limited to classifier guidance |
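
A prompt adherence figure like the ones above can be read as the fraction of generated scenes that satisfy the prompt's requirements. The sketch below assumes a simplified setting where a prompt has already been parsed into required object counts and each scene is just a list of object labels. The parsing step, the exact-count matching rule, and the function names are assumptions for illustration, not the evaluation protocol used in the research.

```python
from collections import Counter


def satisfies(scene_labels, required):
    """True if the scene contains exactly the required count of each named object."""
    counts = Counter(scene_labels)
    return all(counts[name] == n for name, n in required.items())


def adherence_rate(scenes, required):
    """Fraction of scenes that satisfy the prompt's requirements."""
    if not scenes:
        return 0.0
    return sum(satisfies(s, required) for s in scenes) / len(scenes)


if __name__ == "__main__":
    # Hypothetical parse of "a kitchen with four apples and a bowl on the table"
    required = {"apple": 4, "bowl": 1}
    scenes = [
        ["apple"] * 4 + ["bowl"],
        ["apple"] * 4 + ["bowl", "plate"],
        ["apple"] * 3 + ["bowl"],
        ["apple"] * 4 + ["bowl", "fork"],
    ]
    print(adherence_rate(scenes, required))
```

Objects not named in the prompt (the plate and fork here) are ignored by this check; a stricter metric could penalize them, which is a design choice the sketch leaves open.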

Research context and development trajectory

This work builds upon the growing recognition that robotics foundation models require massive, diverse training datasets, a challenge the team addressed by distilling procedural data into a flexible generative prior. The research was supported by Amazon and the Toyota Research Institute, highlighting the industrial relevance of the technology.

While presenting a significant step forward, the researchers describe their current work as “more of a proof of concept”, with several planned enhancements:

- Expanded asset generation: Future versions will use generative AI to create entirely new objects and scenes rather than drawing from a fixed library.

- Articulated objects: Incorporating elements that open or twist, like cabinets and jars, will increase the interactive potential of generated environments.

- Real-world integration: The team aims to incorporate real-world objects using libraries pulled from internet images and their previous work on “Scalable Real2Sim”.

Rick Cory, a roboticist at Toyota Research Institute, captures the potential significance: “Steerable scene generation with post training and inference-time search provides a novel and efficient framework for automating scene generation at scale. Moreover, it can generate ‘never-before-seen’ scenes that are deemed important for downstream tasks. In the future, combining this framework with vast internet data could unlock an important milestone toward efficient training of robots for deployment in the real world.”

The strategic importance for industrial robotics transformation

From an industrial AI perspective, steerable scene generation represents more than just a technical achievement—it offers a strategic solution to the fundamental economic challenge of preparing robots for real-world variability. The technology transforms simulation from a limited approximation into a purposeful training tool that can deliberately address known weaknesses and edge cases.

This approach shifts the automation paradigm from training robots for idealized environments to preparing them for operational reality. For industries where reliability directly impacts operational efficiency and cost, this distinction makes the difference between automation as a theoretical capability and automation as a practical solution.

FAQ: Common questions about steerable scene generation

How is this different from traditional simulation methods?

Traditional procedural generation creates numerous scenes quickly but often lacks physical plausibility, while manually crafted environments are physically accurate but time-consuming and expensive to produce. Steerable scene generation combines the scalability of procedural generation with the physical accuracy of manual creation, while adding the crucial ability to direct scene generation toward specific training objectives.

What evidence exists that this improves real-world robot performance?

While the technology is recent, the researchers demonstrated that virtual robots could successfully perform manipulation tasks like placing cutlery in holders and rearranging food items across generated environments. The scenes were immediately simulation-ready and supported “realistic interaction out of the box”, providing a solid foundation for real-world transfer.

How does the system ensure generated scenes are physically plausible?

The system employs a two-stage process: first, a projection technique resolves inter-object collisions by adjusting translations to the nearest collision-free configuration; then, simulation allows unstable objects to settle under gravity, ensuring static equilibrium. This combination guarantees that output scenes obey physical laws.
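
The first stage can be illustrated with a toy version of collision resolution. The sketch below assumes objects are top-down discs `(x, y, radius)` and pushes overlapping pairs apart along the line between their centers until none interpenetrate. This pairwise scheme is only an approximation of a true nearest-collision-free projection, and the second stage (settling under gravity) is omitted because it requires a physics simulator. The function name and parameters are hypothetical.

```python
import math


def project_collision_free(discs, max_iters=100, eps=1e-9):
    """Push overlapping discs (x, y, r) apart; translations change, radii do not."""
    pts = [[x, y, r] for x, y, r in discs]
    for _ in range(max_iters):
        moved = False
        for i in range(len(pts)):
            for j in range(i + 1, len(pts)):
                dx = pts[j][0] - pts[i][0]
                dy = pts[j][1] - pts[i][1]
                dist = math.hypot(dx, dy)
                depth = pts[i][2] + pts[j][2] - dist  # penetration depth
                if depth > eps:
                    if dist < eps:
                        # Coincident centers: pick an arbitrary direction
                        dx, dy, dist = 1.0, 0.0, 1.0
                    ux, uy = dx / dist, dy / dist
                    shift = depth / 2 + eps  # each disc moves half the depth
                    pts[i][0] -= ux * shift
                    pts[i][1] -= uy * shift
                    pts[j][0] += ux * shift
                    pts[j][1] += uy * shift
                    moved = True
        if not moved:
            break
    return [tuple(p) for p in pts]


if __name__ == "__main__":
    scene = [(0, 0, 1), (1, 0, 1), (5, 0, 1)]
    print(project_collision_free(scene))
```

Objects that were already collision-free are left where they were, which reflects the goal of changing the generated scene as little as possible while making it physically valid.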

What are the computational requirements for this technology?

While specific computational requirements aren’t detailed in the available research, the team identified several efficiency strategies in related work, including early stopping when training models (since “about half the electricity used for training an AI model is spent to get the last 2 or 3 percentage points in accuracy”) and running computing operations during periods when grid electricity comes from renewable sources.

Which companies or organizations supported this research?

The work received support from Amazon and the Toyota Research Institute, indicating recognition of its potential applicability to logistics, manufacturing, and other industrial domains.

The path to more capable industrial robots

MIT’s steerable scene generation technology represents a meaningful advance in how we prepare robots for real-world deployment. By narrowing the sim-to-real gap through diverse, physically accurate, and task-specific training environments, this approach addresses one of the most significant barriers to flexible automation in industrial settings.

As this technology matures and incorporates more real-world data and generative assets, it will enable the creation of even more realistic training scenarios—preparing robots not just for the environments we can easily imagine, but for the unpredictable complexity of actual industrial operations.

