The Canary in the Coal Mine: A Fictional Case Study

Dr. Anika Patel stared at the conflicting reports flooding her dashboard. Data feeds from Region 7’s industrial health network showed abnormal respiratory admissions, while the AI-powered outbreak detection system displayed reassuring green indicators. “False alarm,” the system insisted. Three weeks later, hospitals were overwhelmed with drug-resistant tuberculosis cases, concentrated in communities where wastewater sensors were sparse and clinical data historically underrepresented. The AI had missed the early signals. Not because of faulty algorithms, but because it couldn’t see entire populations.

The scenario is fictional, but the failure mode isn’t. It’s the hidden cost of AI detection bias, a systemic flaw eroding our pandemic defenses. For a deeper look at how bias in industrial AI undermines performance across sectors, see the related analysis of how industrial AI bias is undermining team performance.

1. The Promise and Peril of Industrial Health AI

Industrial health systems represent a $23B frontier where AI processes multimodal data streams from factories, hospitals, environmental sensors, and wearables. Unlike traditional healthcare AI, these systems integrate:

- Real-time syndromic surveillance from occupational clinics

- Wastewater pathogen tracking near industrial zones

- Global supply chain mobility patterns

- Cross-border workforce health records

The vision? A global nervous system detecting outbreaks 48-72 hours faster than conventional methods. During COVID-19, platforms like BlueDot and HealthMap demonstrated this potential by flagging unusual pneumonia cases in Wuhan before official announcements. Learn more about how AI is transforming public health analytics in this analysis of industrial AI in public health.

But the devil lies in the data pipeline. To understand how fragmented data infrastructure impacts AI reliability, check out this study on industrial AI false positives and sensor crises.

2. How Bias Infects Outbreak Detection

2.1. The Anatomy of AI Detection Bias

Industrial health AI inherits biases at three critical junctures:

Data Collection Blind Spots

| Data Source | Bias Risk | Impact Example |
|---|---|---|
| Occupational Health Records | Overrepresents formal sector workers | Misses informal laborers in outbreak zones |
| Wastewater Sensors | Concentrated in high-income areas | Fails to detect pathogens in slums |
| Clinical Diagnostics | Language barriers in EHR systems | Underreports symptoms in migrant populations |
| Social Media Monitoring | Platform access disparities | Ignores outbreaks in low-digital literacy regions |

Algorithmic Amplification

A 2024 study of EPIWATCH, an AI epidemic intelligence platform, found that its dengue fever outbreak predictions were 37% less accurate in rural Indonesia than in Singapore. Why? Training data relied on English-language news reports and hospital records, missing local community reports in Bahasa Indonesia and regional dialects. For a broader perspective on why AI struggles with equitable outcomes, read about AI transparency risks and expert warnings.

Infrastructure Fragmentation

Canada’s Global Public Health Intelligence Network (GPHIN) was once a gold standard. But its 2019 collapse exposed a critical flaw: disconnected data silos. Industrial health AI now faces similar risks, with private systems (e.g., factory clinics) rarely integrating with public health infrastructure. For insights into how data silos hinder AI performance, visit Health Affairs, which explores global health data challenges.

3. Real-World Impacts: When Bias Fuels Outbreaks

3.1. The 2024 H5N1 Surveillance Gap

When avian influenza jumped to U.S. dairy workers in 2024, industrial health AI initially missed the cluster. Why?

- Data Void: Farmworkers lacked digital health records

- Language Gap: Worker symptom reports in Spanish weren’t processed

- Economic Bias: Algorithms prioritized human-to-human transmission signals over zoonotic risks

By the time the system alerted authorities, 11 additional farms were exposed.

3.2. The Cost Equation

| Bias Consequence | Economic Impact | Human Impact |
|---|---|---|
| Delayed detection | $23B-$65B in additional outbreak control costs (WHO 2025) | 19% higher mortality in missed outbreaks |
| Resource misallocation | Vaccines deployed to low-risk areas 34% more often | Vulnerable communities denied critical care |
| False alarms | $2.1B annual loss from unnecessary factory shutdowns | Erosion of public trust in health systems |

4. Technical Deep Dive: Why Industrial AI Fails

4.1. The Multisource Data Trap

Industrial systems excel at processing structured data (e.g., hospital admissions, lab reports) but stumble with high-value unstructured signals:

- Miners’ voice notes about respiratory symptoms

- Satellite imagery of abnormal livestock deaths

- Pharmacy sales spikes in remote towns

As Dr. Maria Deiner (Stanford AI Lab) notes:

“We’ve built bilingual AIs that understand English and Mandarin, but not the dialects of vulnerability.”
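Of the signals listed above, pharmacy sales are the easiest to turn into a time series. A minimal sketch, assuming daily sales counts for one town are already available: flag any day whose sales exceed the trailing seven-day mean by more than three standard deviations. The function name `flag_spikes`, the window, the threshold, and the sales figures are hypothetical choices for illustration, not a description of any production system.

```python
from statistics import mean, stdev

def flag_spikes(daily_sales, window=7, threshold=3.0):
    """Return indices of days whose sales exceed the trailing-window mean
    by more than `threshold` standard deviations (a simple z-score rule)."""
    flagged = []
    for day in range(window, len(daily_sales)):
        baseline = daily_sales[day - window:day]
        # Floor the spread at 1 unit so a perfectly flat baseline
        # does not cause division by zero (an assumed safeguard).
        spread = max(stdev(baseline), 1.0)
        z = (daily_sales[day] - mean(baseline)) / spread
        if z > threshold:
            flagged.append(day)
    return flagged

# Hypothetical daily sales of fever and cough remedies in one remote town.
sales = [20, 22, 19, 21, 20, 23, 21, 22, 20, 48, 21]
print(flag_spikes(sales))  # [9]
```

A trailing window adapts to slow seasonal drift, but a town with no pharmacy data feed produces no alerts at all, which is exactly the blind spot this section describes.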

4.2. The Black Box Vortex

During the 2023 mpox outbreak, an AI model recommended diverting vaccines from Africa to Europe. The rationale? Higher “predicted risk.” Later audits revealed:

- Training data overrepresented European gay communities

- African symptom data was excluded due to “format inconsistencies”

- Climate vulnerability factors weren’t weighted

This explainability crisis persists: 92% of industrial health AI systems lack audit trails for bias detection. For a deeper dive into how explainable AI can address these issues, see Nature Machine Intelligence.
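One concrete piece of an audit trail is logging every record an ingestion pipeline rejects, together with its region, so that disproportionate exclusions like the "format inconsistencies" above become visible before training. A minimal sketch, assuming a hypothetical schema with `region`, `onset_date`, and `symptoms` fields; `IngestAudit`, `ingest`, the region codes, and the 20% review threshold are illustrative names and values, not part of any real platform.

```python
from collections import Counter
from dataclasses import dataclass, field

# Hypothetical minimal schema for a symptom report.
REQUIRED_FIELDS = ("region", "onset_date", "symptoms")

@dataclass
class IngestAudit:
    kept: Counter = field(default_factory=Counter)
    dropped: Counter = field(default_factory=Counter)
    reasons: list = field(default_factory=list)  # (region, reason) pairs

    def exclusion_rate(self, region):
        total = self.kept[region] + self.dropped[region]
        return self.dropped[region] / total if total else 0.0

    def regions_to_review(self, max_rate=0.2):
        """Regions whose share of rejected records exceeds `max_rate`."""
        regions = set(self.kept) | set(self.dropped)
        return sorted(r for r in regions if self.exclusion_rate(r) > max_rate)

def ingest(records, audit):
    """Keep complete records; log every rejection instead of dropping it silently."""
    clean = []
    for rec in records:
        region = rec.get("region") or "unknown"
        missing = [f for f in REQUIRED_FIELDS if not rec.get(f)]
        if missing:
            audit.dropped[region] += 1
            audit.reasons.append((region, "missing " + ", ".join(missing)))
            continue
        audit.kept[region] += 1
        clean.append(rec)
    return clean

reports = [
    {"region": "EU", "onset_date": "2023-05-01", "symptoms": "rash"},
    {"region": "EU", "onset_date": "2023-05-02", "symptoms": "fever"},
    {"region": "AF", "onset_date": "", "symptoms": "rash"},
    {"region": "AF", "onset_date": "2023-05-03", "symptoms": "fever, rash"},
]
audit = IngestAudit()
clean = ingest(reports, audit)
print(audit.regions_to_review())  # ['AF']
```

Flagging a region does not repair its data; the point is that a reviewer sees the exclusion before a model is trained on whatever remains.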

5. Pathways to Bias-Resistant AI

5.1. Technical Antidotes

Federated Learning Systems

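Siemens’ Industrial Health Neurosphere demonstrates how factories can pool what they learn from anonymized health data without sharing raw records. Local AI models train on-site, and only encrypted model updates leave each factory. Bias is then tested and mitigated through:

- Synthetic Minority Oversampling (SMOTE): Generates artificial records for underrepresented groups by interpolating between real records from those groups

- Adversarial Debiasing: Trains the outbreak model alongside an adversary that tries to infer a protected attribute (such as ethnicity or region) from the model’s outputs, penalizing the model whenever the adversary succeeds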
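The oversampling step can be sketched in a few lines. This follows the basic SMOTE idea: pick a record from the underrepresented group, pick one of its k nearest neighbours from the same group, and create a new record at a random point on the line between them. It is a simplified illustration with hypothetical two-feature records; for real work, the imbalanced-learn library's `imblearn.over_sampling.SMOTE` is the usual choice. SMOTE was designed to balance outcome classes, so applying it to demographic groups is an adaptation.

```python
import random

def smote(minority, n_synthetic, k=3, seed=0):
    """Create `n_synthetic` new points by interpolating between a randomly
    chosen minority-group point and one of its k nearest minority neighbours."""
    if len(minority) < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    rng = random.Random(seed)

    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    synthetic = []
    for _ in range(n_synthetic):
        i = rng.randrange(len(minority))
        base = minority[i]
        others = [p for j, p in enumerate(minority) if j != i]
        neighbours = sorted(others, key=lambda p: sq_dist(base, p))[:k]
        partner = rng.choice(neighbours)
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic.append(tuple(b + gap * (p - b) for b, p in zip(base, partner)))
    return synthetic

# Hypothetical two-feature records from an underrepresented group.
minority = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
new_points = smote(minority, n_synthetic=5)
```

Because every synthetic point lies between two real ones, SMOTE only fills in the region a group already occupies; it cannot reveal patterns where no data was collected.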

Multimodal Intelligence Fusion

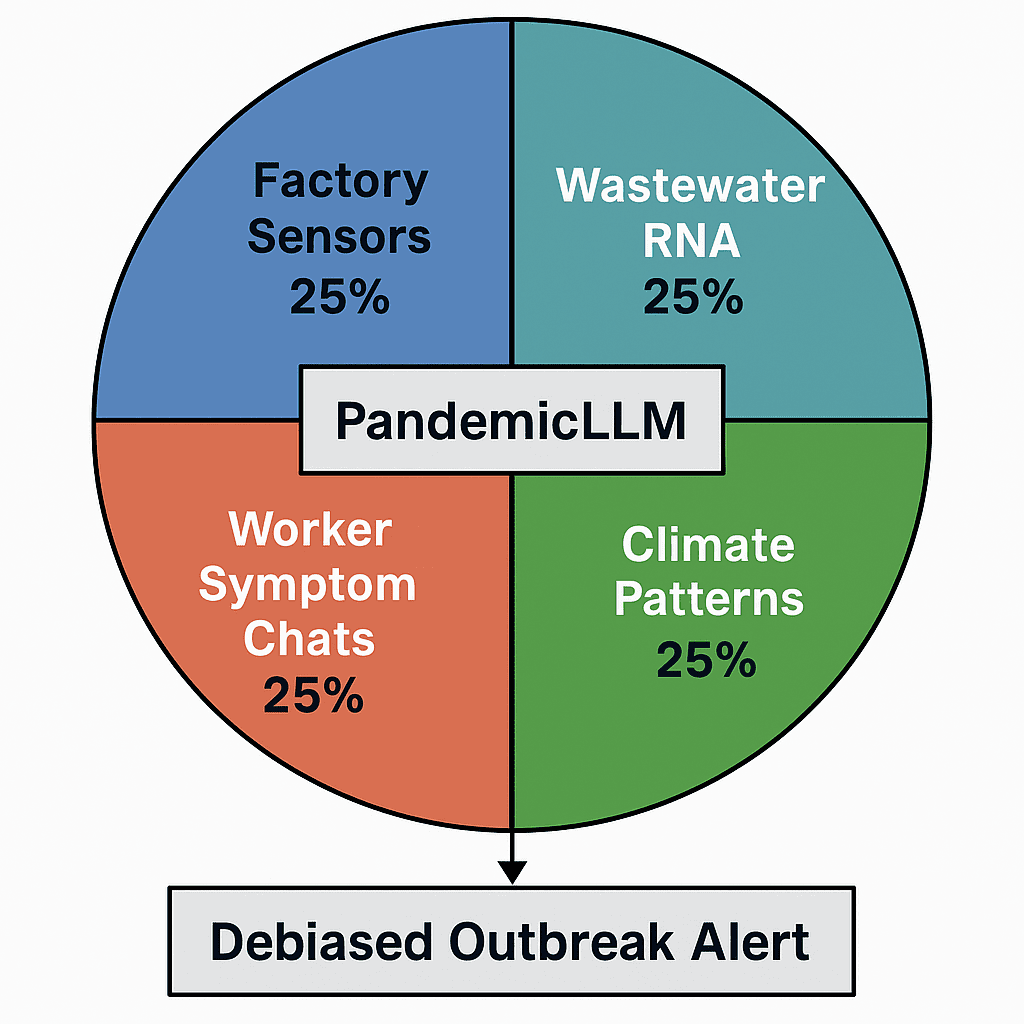

PandemicLLM integrates multiple data streams into a single model.

This cross-source correlation cuts detection delays by 60%.
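One simple way to express cross-source correlation is to require that independent data streams agree before raising an alert. The sketch below is a hypothetical fusion rule, not PandemicLLM's actual method: it takes per-source anomaly z-scores for one region and time window (assumed to be computed upstream against each source's own baseline), alerts when at least two sources are individually elevated, and otherwise falls back on Stouffer's method, which combines z-scores as their sum divided by the square root of their count. Stouffer's method assumes the sources are independent, which real surveillance streams rarely are, and every threshold here is an assumed value.

```python
from math import sqrt

def fuse_signals(z_scores, z_single=2.0, min_corroborating=2, z_combined=3.0):
    """Decide whether to alert for one region and time window.

    `z_scores` maps source name to an anomaly z-score, or None when the
    source has no data for this region. Missing sources are skipped rather
    than treated as zero, so an absent sensor is not read as "all clear".
    Returns (alert, combined_score).
    """
    available = [z for z in z_scores.values() if z is not None]
    if not available:
        return False, 0.0
    combined = sum(available) / sqrt(len(available))  # Stouffer's method
    corroborating = sum(1 for z in available if z >= z_single)
    alert = corroborating >= min_corroborating or combined >= z_combined
    return alert, combined

# Hypothetical readings for one district on one day.
district = {"clinic_visits": 1.8, "wastewater": 2.4, "pharmacy_sales": 2.1, "mobility": None}
alert, score = fuse_signals(district)  # alert is True: two sources reach 2.0
```

The flip side is built in: a district served by a single data source can never produce two corroborating signals, so the combined-score fallback (or a lower corroboration requirement for low-data regions) is what keeps it from being silently ignored.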

5.2. Operational Overhauls

- Bias Stress Testing: Like financial “stress tests,” simulate outbreaks in marginalized communities

- AI-Human Handshake Protocols: Kenya’s health ministry uses AI triage assistants that trigger human verification for alerts from low-data regions

- Incentive Realignment: Pay AI developers for equity performance (e.g., detection parity across demographics)
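A bias stress test can be as simple as simulating the same outbreak in two communities that differ only in how often cases reach the surveillance system, then comparing how often the detector fires. The sketch below assumes a deliberately crude detector (alert once reported cases reach a fixed count) and hypothetical reporting rates. It also assumes one possible definition of detection parity: the lowest community detection rate divided by the highest, so 1.0 means equal performance.

```python
import random

def detection_rate(true_cases, reporting_rate, alert_threshold, trials=2000, seed=0):
    """Share of simulated outbreaks that trigger an alert when each true case
    reaches the system independently with probability `reporting_rate`."""
    rng = random.Random(seed)
    detected = 0
    for _ in range(trials):
        reported = sum(rng.random() < reporting_rate for _ in range(true_cases))
        if reported >= alert_threshold:
            detected += 1
    return detected / trials

def stress_test(reporting_rates, true_cases=30, alert_threshold=10):
    """Run the same simulated outbreak in every community.
    Returns per-community detection rates and a parity ratio (min / max)."""
    rates = {
        name: detection_rate(true_cases, r, alert_threshold)
        for name, r in reporting_rates.items()
    }
    best = max(rates.values())
    parity = min(rates.values()) / best if best > 0 else 0.0
    return rates, parity

# Hypothetical reporting rates: most formal-sector cases reach a clinic
# record, while few informal workers' cases do.
rates, parity = stress_test({"formal_sector": 0.6, "informal_workers": 0.2})
```

In this toy setup the detector is identical for both groups; the gap comes entirely from under-reporting, which is why a stress test has to model data coverage and not just the algorithm.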

6. The Future: Industrial AI as Equity Infrastructure

By 2027, industrial health systems could prevent 78% of bias-related surveillance failures through:

Predictive Equity Frameworks

Tools like BiasGuard (MIT) now pre-audit training datasets for:

- Demographic representation gaps

- Contextual data voids (e.g., missing indigenous knowledge)

- Historical injustice echoes
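The first check, demographic representation gaps, reduces to comparing each group's share of the training data with its share of the population being monitored. A minimal sketch, assuming each record carries a hypothetical `group` label and that population shares come from an external source such as a census; the function name and the 0.8 cutoff are assumptions for illustration, not how BiasGuard works. It does not address the other two items, which need context a simple count cannot capture.

```python
from collections import Counter

def representation_gaps(records, population_share, min_ratio=0.8, key="group"):
    """Return groups whose share of `records` falls below `min_ratio`
    times their share of the population, with the ratio rounded to 2 places."""
    counts = Counter(r[key] for r in records)
    total = sum(counts.values())
    gaps = {}
    for group, pop_share in population_share.items():
        if pop_share <= 0:
            continue  # nothing to compare against
        data_share = counts[group] / total if total else 0.0
        ratio = data_share / pop_share
        if ratio < min_ratio:
            gaps[group] = round(ratio, 2)
    return gaps

# Hypothetical training set of 100 records and census population shares.
training = (
    [{"group": "urban"}] * 80
    + [{"group": "peri_urban"}] * 15
    + [{"group": "informal_settlement"}] * 5
)
census = {"urban": 0.5, "peri_urban": 0.2, "informal_settlement": 0.3}
print(representation_gaps(training, census))
# {'peri_urban': 0.75, 'informal_settlement': 0.17}
```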

Regulatory Teeth

EU regulation, including the AI Act and the proposed AI Liability Directive, is moving toward:

- Bias impact assessments for health AI

- Compensation funds for victims of algorithmic discrimination

- Third-party “bias bounty” programs

7. Call to Action: Building Antibodies Against Bias

The next pandemic won’t be defeated by technology alone. It requires:

- Worker-Centric Design: Involve informal laborers in AI training data collection

- Industrial Data Cooperatives: Factories pooling anonymized health data with public health agencies

- Bias Disclosure Standards: Like nutrition labels for AI models

As Vyoma Gajjar (IBM AI Ethics) warns:

“We’re stress-testing AI models for accuracy, but not for justice. That’s a fatal flaw.”

Key Takeaways

- AI detection bias isn’t a glitch—it’s baked into industrial health systems through fragmented data, algorithmic shortcuts, and infrastructure gaps

- The human cost manifests as delayed outbreak responses and discriminatory resource allocation

- Solutions exist at the technical (federated learning), operational (bias stress tests), and regulatory levels

- Industrial health AI must evolve from surveillance tools to equity infrastructure

Note: While this article highlights verified trends and developments, some areas remain fluid due to ongoing innovation and evolving industry standards.

FAQs

How does AI bias differ in industrial vs. clinical health systems?

Industrial systems face unique biases from spatial data voids (e.g., missing factory zones), occupational blind spots (e.g., ignoring informal workers), and commercial secrecy barriers that limit data sharing.

Can’t we just add more data to fix bias?

More data ≠ better data. India’s Aarogya Setu app collected 1.2B health data points but still missed slum outbreaks due to sampling bias. Quality requires intentional inclusion of marginalized communities.
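A small worked example shows why volume does not cure sampling bias. Suppose, hypothetically, that a surveillance app's users are 90% from formal housing and 10% from informal settlements, while the real population split is 60/40. Pooling all users gives a prevalence close to the formal-housing rate; reweighting each stratum by its population share (post-stratification) corrects the estimate, but only for strata that were sampled at all. All numbers and function names below are invented for illustration and are not Aarogya Setu data.

```python
def naive_prevalence(strata):
    """Pool every sampled person, ignoring who is over- or under-represented."""
    positives = sum(s["positives"] for s in strata.values())
    sampled = sum(s["sampled"] for s in strata.values())
    return positives / sampled

def poststratified_prevalence(strata, population_share):
    """Weight each stratum's observed rate by its share of the real population."""
    estimate = 0.0
    for name, share in population_share.items():
        s = strata.get(name)
        if not s or s["sampled"] == 0:
            raise ValueError(f"no data for stratum {name!r}; reweighting cannot fill the gap")
        estimate += share * s["positives"] / s["sampled"]
    return estimate

strata = {
    "formal_housing": {"sampled": 9000, "positives": 90},        # 1% positive
    "informal_settlement": {"sampled": 1000, "positives": 100},  # 10% positive
}
population_share = {"formal_housing": 0.6, "informal_settlement": 0.4}
print(naive_prevalence(strata))                             # 0.019
print(poststratified_prevalence(strata, population_share))  # about 0.046
```

The `ValueError` carries the point of this answer: if a community never appears in the sample, no amount of reweighting or extra data from other groups can estimate its rate.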

Are companies liable for biased outbreak AI?

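Not yet in most jurisdictions, but that is changing. The EU AI Act, whose obligations are being phased in, allows fines of up to 7% of global annual turnover for prohibited AI practices and up to 3% for breaches of most other obligations, including those for high-risk systems.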

How can frontline workers help reduce AI bias?

Report data gaps through bias whistleblower portals and participate in community data validation networks.

Essence

Industrial health AI is failing to detect outbreaks in vulnerable communities due to embedded biases in data collection, algorithmic design, and implementation. This “invisible epidemic” delays responses, exacerbates health inequities, and increases economic costs. Solutions require federated learning, bias stress testing, and regulatory frameworks that prioritize equity over efficiency.
